
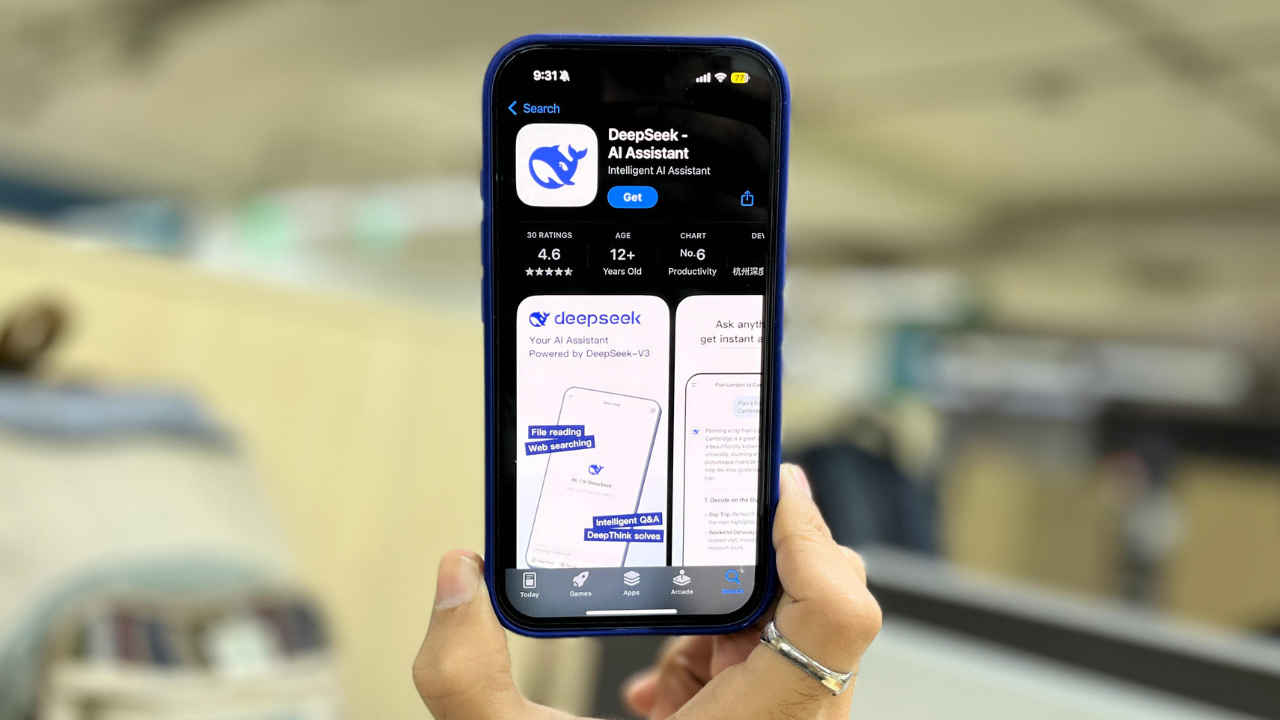

If you’ve been looking for an alternative to ChatGPT, you’re in luck. DeepSeek has recently launched DeepSeek-R1, a large language model (LLM) built to compete with OpenAI’s o1. What sets DeepSeek-R1 apart is not only its capabilities but also its accessibility: the model is free for both personal and commercial use, and developers and researchers can freely access its underlying code and weights. One of its standout features is that it works through complex reasoning tasks using chain-of-thought reasoning without needing to be explicitly prompted to do so. This positions it as a serious alternative to OpenAI’s ChatGPT.

DeepSeek-R1 builds on its predecessor, DeepSeek-V3-Base. It has 671 billion parameters in total, of which only 37 billion are active for any given token. The model handles input contexts of up to 128,000 tokens, which lets it work through long reasoning tasks. It has also been fine-tuned using reinforcement learning alongside synthetic datasets, which has significantly improved its accuracy; the gains come from training techniques that reward precise outputs.
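To put the parameter counts above in perspective, the short Python sketch below computes what share of the model is active at once. The function name `active_fraction` is purely illustrative; the only inputs are the 671 billion and 37 billion figures quoted above.

```python
def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Return the share of parameters that are active, as a value in [0, 1]."""
    if total_params_b <= 0:
        raise ValueError("total_params_b must be positive")
    return active_params_b / total_params_b


# Figures quoted in the article, in billions of parameters.
share = active_fraction(total_params_b=671, active_params_b=37)
print(f"Active share: {share:.1%}")
```

With the quoted figures, roughly 5.5% of the parameters take part at any one time, which is why the model can be large overall yet comparatively light to run per token.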

For those who want to build this model into their applications, DeepSeek-R1 is available through an API. Pricing is highly competitive: $0.55 per million input tokens and $2.19 per million output tokens, which makes DeepSeek-R1 a markedly more economical option than OpenAI’s o1.
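To see what these rates mean for a real workload, here is a minimal Python sketch that turns token counts into an estimated bill. It uses only the two prices quoted above; the function name `estimate_cost_usd` and the sample workload are hypothetical, and actual billing may differ (for example through discounts or price changes).

```python
INPUT_PRICE_PER_MILLION_USD = 0.55   # quoted price per million input tokens
OUTPUT_PRICE_PER_MILLION_USD = 2.19  # quoted price per million output tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a workload at the quoted per-token rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION_USD
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION_USD
    return input_cost + output_cost


# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
print(f"Estimated cost: ${estimate_cost_usd(200_000, 50_000):.4f}")
```

Because output tokens cost about four times as much as input tokens, workloads that generate long answers will be dominated by the output side of the bill.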

On performance benchmarks, DeepSeek-R1 has made quite an impact. It outperformed OpenAI’s o1 on 5 of 11 tested benchmarks, including demanding assessments such as AIME 2024 and MATH-500. It also beat Anthropic’s Claude 3.5 Sonnet and OpenAI’s GPT-4o across a range of evaluations. Related models, including DeepSeek-R1-Zero and the distilled variants based on Qwen and Llama, were competitive as well, in some cases surpassing OpenAI’s o1-mini.

A notable distinction of DeepSeek-R1 is the transparency of its reasoning process. Unlike OpenAI’s o1, which hides its intermediate reasoning steps, DeepSeek-R1 shows how it arrives at an answer. This transparency builds trust among users, and the visible reasoning can be used to train smaller yet highly accurate models through a process known as distillation.
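In practice, visible reasoning means an application can separate the model’s working from its final answer. The sketch below assumes the raw output wraps the reasoning in `<think>...</think>` tags ahead of the answer, which is how the open-weight model is commonly reported to format it; that format is not described in this article, hosted APIs may expose the reasoning differently, and the helper name `split_reasoning` is hypothetical.

```python
import re
from typing import Tuple

# Assumption: the reasoning is wrapped in <think>...</think> ahead of the answer.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> Tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    If no <think> block is found, the reasoning is returned as an empty string
    and the whole output is treated as the answer.
    """
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = raw_output[match.end():].strip()
    return reasoning, answer


# Made-up sample output, used only to exercise the helper.
sample = "<think>2 + 2 is 4, and doubling gives 8.</think>\nThe answer is 8."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Keeping the two parts separate lets an application log or audit the reasoning while showing users only the final answer.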

In summary, DeepSeek-R1 is a significant step forward for large language models, not just in capabilities but also in accessibility and affordability. With its advanced reasoning skills, strong benchmark results, and low pricing, it stands as a viable alternative to existing models. For developers, researchers, and organizations that want to use AI without the high costs typically associated with leading solutions, DeepSeek-R1 is well worth considering.